flower-dp

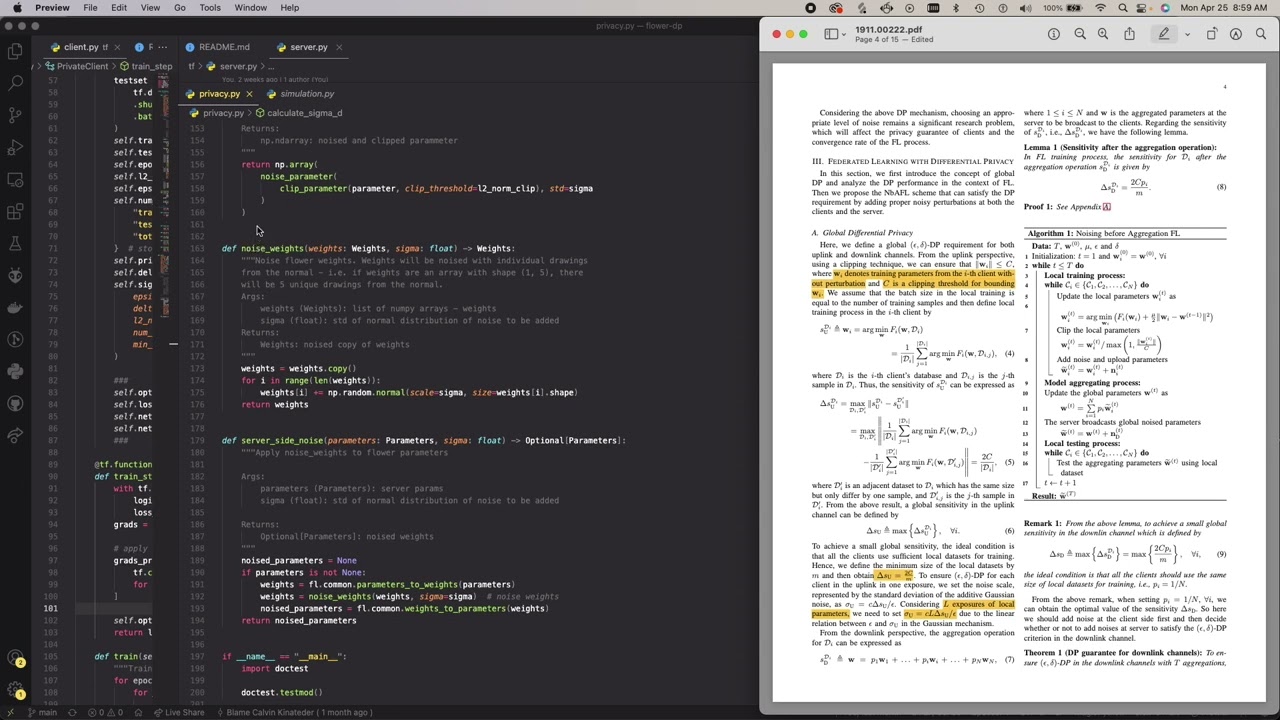

A custom (ε, δ)-DP implementation for the flower.dev federated learning framework. flower-dp uses both the noising before model aggregation FL (NbAFL) method and noising during model aggregation [1]. All of the noising is implemented and shown within the code rather than delegated to an outside source. This decision was made for the sake of transparency, practical functionality, and the ability to adapt to other machine learning frameworks.

While researching other frameworks, I found that almost all of them take a “noise multiplier” as a parameter and calculate ε (the privacy budget) from that multiplier and other parameters. One of the features I wanted to center in this custom implementation was the ability to pass ε directly as a parameter. I think that being able to fix ε up front, rather than an arbitrary “noise multiplier”, is very important. From a practical standpoint, it makes much more sense to guarantee in advance a privacy metric with real meaning.

Project based on the paper Federated Learning with Differential Privacy: Algorithms and Performance Analysis.

Documentation Overview and Walkthrough


A Quick Overview of Differential Privacy for Federated Learning

Imagine that you have two neighboring datasets x and y and a randomisation mechanism M. Since they’re neighboring, x and y differ by exactly one value. We say that M is ε-differentially private if, for every run of M(x), it’s about equally likely to see the same output on every neighboring dataset y, with ε bounding how far those probabilities may differ [2].

Assume that

\(\large S\subseteq \mathrm{Range}(\mathcal M)\)

In other words, M preserves ε-DP if

\(\large P[\mathcal M (x) \in S] \le \exp(\epsilon) P[\mathcal M (y) \in S]\)
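To make the inequality concrete, here is a minimal sketch that checks it for a toy mechanism. The two-coin randomized response mechanism and the function names are illustrative assumptions and are not part of flower-dp; the mechanism is known to satisfy ε-DP for ε = ln 3.

```python
import math


def randomized_response(truth: bool) -> dict:
    """Output distribution of a two-coin randomized response mechanism.

    Illustrative mechanism, not taken from flower-dp: with probability 1/2
    report the true bit, otherwise report the result of a second fair coin.
    """
    p_yes = 0.5 * (1.0 if truth else 0.0) + 0.5 * 0.5
    return {"yes": p_yes, "no": 1.0 - p_yes}


def satisfies_eps_dp(epsilon: float, tol: float = 1e-12) -> bool:
    """Check P[M(x) in S] <= exp(epsilon) * P[M(y) in S] in both directions.

    For a discrete mechanism it is enough to check single outputs, because
    the bound for any set S follows by summing over its elements.
    """
    p_x = randomized_response(True)   # dataset x: the single value is "yes"
    p_y = randomized_response(False)  # neighbor y: the single value is "no"
    bound = math.exp(epsilon)
    return all(
        p_x[o] <= bound * p_y[o] + tol and p_y[o] <= bound * p_x[o] + tol
        for o in ("yes", "no")
    )


if __name__ == "__main__":
    print(satisfies_eps_dp(math.log(3)))  # True: 0.75 <= 3 * 0.25
    print(satisfies_eps_dp(1.0))          # False: 0.75 > e * 0.25
```

A smaller ε forces the two output distributions closer together, which is why ε works as a privacy budget.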

In our scenario, the “datasets” would be the weights of the model. So, we add a certain amount of noise to each gradient during gradient descent to ensure that a specific user’s data cannot be extracted while the model can still learn. Because we’re adding noise to the gradients, we must bound them first, so that no single contribution can outweigh the noise. We do this by clipping using the Euclidean (L2) norm. This is controlled by the parameter C or l2_norm_clip.
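The clip-then-noise step described above could be sketched as follows. This is a framework-free illustration using plain Python lists, not the code in tf/ or pytorch/. The function names and the `sigma` parameter are assumptions; only `l2_norm_clip` comes from the text.

```python
import math
import random


def clip_by_l2_norm(vector, l2_norm_clip):
    """Scale `vector` down so its Euclidean (L2) norm is at most l2_norm_clip."""
    norm = math.sqrt(sum(v * v for v in vector))
    # Vectors already inside the bound (or all zeros) are left unchanged.
    scale = min(1.0, l2_norm_clip / norm) if norm > 0 else 1.0
    return [v * scale for v in vector]


def add_gaussian_noise(vector, sigma, rng=None):
    """Add independent zero-mean Gaussian noise with std `sigma` to each entry."""
    rng = rng or random.Random()
    return [v + rng.gauss(0.0, sigma) for v in vector]


if __name__ == "__main__":
    gradient = [3.0, 4.0]                      # L2 norm is 5
    clipped = clip_by_l2_norm(gradient, 1.0)   # rescaled to norm 1
    noised = add_gaussian_noise(clipped, sigma=0.1, rng=random.Random(0))
    print(clipped, noised)
```

Clipping comes first so that the noise scale can be chosen relative to a known bound C on each contribution.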

δ is the probability of information being accidentally leaked (0 ≤ δ ≤ 1). Its value should be chosen relative to the size of the dataset: typically we’d like δ to be less than the inverse of the dataset size. For example, if the training dataset has 20000 rows, δ ≤ 1 / 20000. To include this in the general formula,

\(\large P[\mathcal M (x) \in S] \le \exp(\epsilon) P[\mathcal M (y) \in S] + \delta\)
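One way to turn a chosen ε and δ into a noise scale is the classic Gaussian mechanism calibration, σ = sqrt(2 ln(1.25 / δ)) · Δ / ε, which gives (ε, δ)-DP for 0 < ε < 1. The sketch below assumes that calibration and is not necessarily the exact formula flower-dp uses. The function name and the `sensitivity` parameter (Δ, the most one record can change the output in L2 norm) are hypothetical.

```python
import math


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise std for the classic Gaussian mechanism (assumes 0 < epsilon < 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if not 0 < delta < 1:
        raise ValueError("delta must lie strictly between 0 and 1")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon


if __name__ == "__main__":
    dataset_size = 20000
    delta = 1.0 / dataset_size  # the upper limit suggested in the text
    for epsilon in (0.1, 0.5, 0.9):
        print(epsilon, gaussian_sigma(epsilon, delta, sensitivity=1.0))
```

The noise grows as ε shrinks, which is the trade-off that passing ε directly makes explicit.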

Getting Started

Note that this has been tested on UNIX systems.

To install, clone the repo and cd into the directory.

git clone https://github.com/ckinateder/flower-dp.git

cd flower-dp

To install the packages, you can use virtualenv (for a lighter setup) or Docker (recommended).

virtualenv

To set up a virtual environment, use virtualenv.

virtualenv env

source env/bin/activate

pip3 install -r requirements.txt

Docker

To build and run the Docker image:

docker build -t flower-dp:latest .

docker run --rm -it -v `pwd`:`pwd` -w `pwd` --gpus all --network host flower-dp:latest bash

Further Documentation

See the README in tf/ and pytorch/ for details specific to each framework.

Links

Frameworks

Material for Future Reference

<!-- LaTeX generated from https://editor.codecogs.com/ -->